It all started in college…

I have loved mathematics since I was a boy; it was the only subject I was good at in high school. As a result, I studied Mathematics and Information Engineering at CUHK. My first research experience was my college Final Year Project: Image Upsampling via Tight Frame Transform. In this project, we investigated how to exploit the Tight Frame Space Decomposition to upsample images by embedding the input image as one of the low-dimensional subspaces. The method produced sharpened results in the high-dimensional space and converged empirically; however, I did not succeed in proving its convergence mathematically. The presentation slides for this project are here, and the write-up is here.
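For readers curious about the setup, one common way to write tight-frame upsampling is sketched below. This is a generic formulation under stated assumptions, not necessarily the project's exact algorithm: $H_0$ is the low-pass analysis operator, $H_1, \dots, H_r$ are the high-pass ones, the low-resolution input $g$ is assumed to equal $H_0 f$ for the unknown high-resolution image $f$, and $\mathcal{D}_\lambda$ is a hypothetical thresholding (denoising) operator.

$$
\sum_{i=0}^{r} H_i^{*} H_i = I,
\qquad
f^{(n+1)} = H_0^{*}\, g + \sum_{i=1}^{r} H_i^{*}\, \mathcal{D}_\lambda\!\left(H_i f^{(n)}\right).
$$

The tight-frame identity on the left makes any $f$ with $H_0 f = g$ a fixed point of the iteration when $\mathcal{D}_\lambda$ is the identity; once a nonlinear thresholding step is included, convergence is much harder to establish.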

From Work to Graduate Study

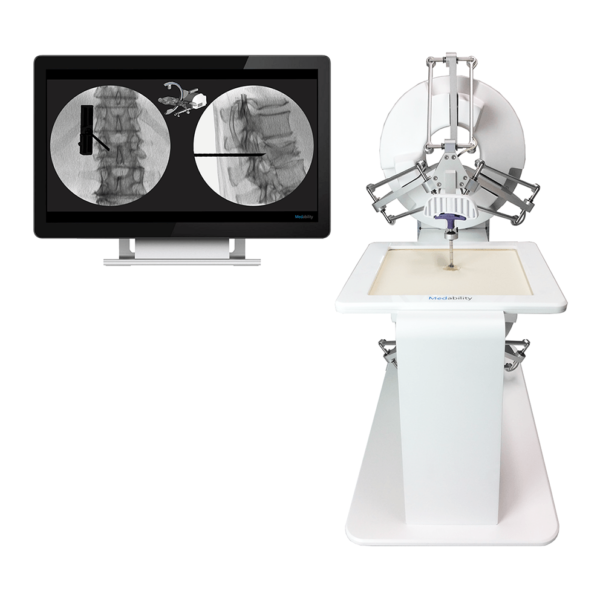

After college, I worked as a Computer Vision Engineer and System Analyst for three years. Having saved enough, I continued my studies at TUM as a master's student in Biomedical Computing. My time abroad at TUM broadened my horizons. It gave me many opportunities to explore my life and my interests, which I seldom had in HK due to financial constraints and a lack of connections. TUM has an open culture that embraces diversity and equality. I was involved in medical simulation research at the NARVIS lab, where I helped implement medical simulators and conduct user studies for a startup, Medability GmbH. At the end of my studies, I visited JHU as a research intern to work on the CAMC project at the CAMP lab and finished my master's thesis there. Here are the thesis presentation slides. (Image courtesy of Medability GmbH)

During PhD Study

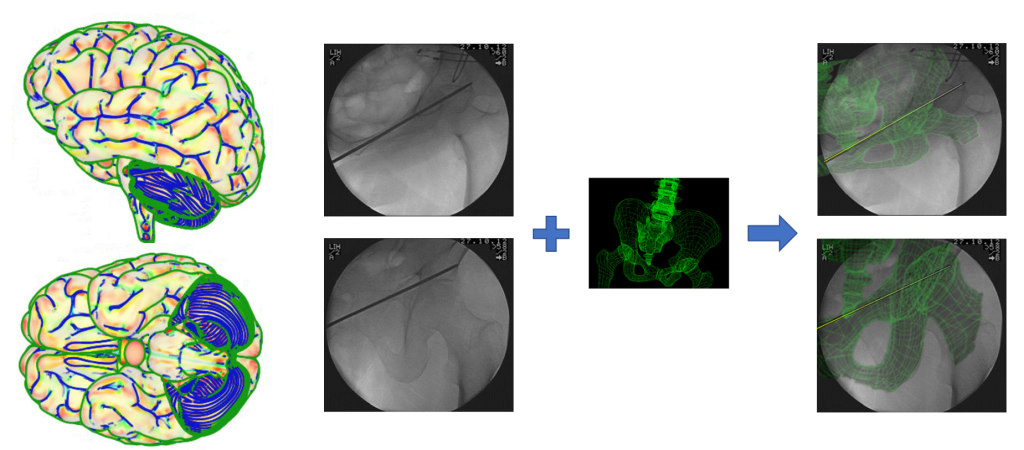

My PhD research began with the 3D-camera-to-medical-device calibration project, supervised by Prof. Dr. Nassir Navab. Registering the camera and medical imaging coordinate systems enables mixed-reality visualization for surgical operations. Our team conducted a pre-clinical user study to demonstrate its application in orthopedic surgery, where it could reduce radiation usage from over 100 X-rays to fewer than 5. Later, we integrated surgical tool tracking and developed a mixed-reality support system that helps surgeons complete tasks according to pre-operative planning. This work won the Outstanding Paper award at the MICCAI workshop AE-CAI 2017. Here are the oral presentation and the poster.
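Registering the two coordinate systems amounts to estimating one fixed rigid transform; after that, anything the 3D camera tracks can be expressed in the C-arm's frame by chaining homogeneous transforms. The sketch below shows only that chaining, with hypothetical names and numbers (`T_carm_from_cam`, `T_cam_from_tool`, the `rigid` helper); a real overlay would additionally need the X-ray projection geometry, which is omitted here.

```python
import math

def matmul4(A, B):
    """Product of two 4x4 homogeneous transforms (lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def transform_point(T, p):
    """Apply a 4x4 rigid transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(T[i][k] * v[k] for k in range(4)) for i in range(3))

def rigid(rz_deg, t):
    """Hypothetical helper: rotation about z by rz_deg degrees, then translation t."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]

# Hypothetical values: the calibration (camera -> C-arm) is estimated once,
# while the tool pose (tool -> camera) comes from camera tracking every frame.
T_carm_from_cam = rigid(90.0, (0.0, 0.0, 500.0))
T_cam_from_tool = rigid(0.0, (100.0, 0.0, 0.0))
T_carm_from_tool = matmul4(T_carm_from_cam, T_cam_from_tool)
tip_in_carm = transform_point(T_carm_from_tool, (0.0, 0.0, 10.0))
```

Because the calibration is a single fixed matrix, the per-frame cost is just one extra matrix product.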

Moving back to surgical simulation, I worked as a computer graphics and visualization intern at Intuitive Surgical in Sunnyvale from 2017 to 2018. Intuitive advances minimally invasive surgery with robotic technology (the da Vinci Surgical System). At Intuitive, I developed an iOS app that immersively replays recorded endoscopic videos with Google Daydream for surgical training. Then, I researched real-time soft-tissue deformation simulation, implementing Position-Based Dynamics (PBD) and integrating it into the in-house visualization system. The preliminary results showed acceptable accuracy, but further improvement is required for clinical use. I did not have enough time to finish comparing co-rotational FEM with extended PBD, which I would still like to do when I have time.
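The core idea of PBD is to predict positions, correct them directly so that constraints hold, and then derive velocities from the corrected positions. The sketch below uses the standard distance-constraint projection from the PBD literature; it is a generic textbook version, not the Intuitive implementation, and it omits stiffness, collisions, and the volume or strain constraints a soft-tissue model would need. The function name `pbd_step` and its parameters are hypothetical.

```python
import math

def pbd_step(x, v, inv_mass, constraints, dt=1.0 / 60.0, iterations=10,
             gravity=(0.0, -9.81, 0.0)):
    """One Position-Based Dynamics step with distance constraints.

    x, v: lists of [x, y, z] positions and velocities.
    inv_mass: inverse masses; 0 pins a particle in place.
    constraints: list of (i, j, rest_length) tuples.
    """
    # 1. Apply gravity to free particles and predict positions.
    v = [[vi[k] + (dt * gravity[k] if w > 0 else 0.0) for k in range(3)]
         for vi, w in zip(v, inv_mass)]
    p = [[xi[k] + dt * vi[k] for k in range(3)] for xi, vi in zip(x, v)]

    # 2. Project constraints directly on positions (Gauss-Seidel sweeps).
    for _ in range(iterations):
        for i, j, rest in constraints:
            wi, wj = inv_mass[i], inv_mass[j]
            if wi + wj == 0.0:
                continue
            d = [p[i][k] - p[j][k] for k in range(3)]
            length = math.sqrt(sum(c * c for c in d))
            if length == 0.0:
                continue
            c_val = length - rest          # constraint value C(p_i, p_j)
            n = [c / length for c in d]    # constraint gradient direction
            for k in range(3):
                p[i][k] -= wi / (wi + wj) * c_val * n[k]
                p[j][k] += wj / (wi + wj) * c_val * n[k]

    # 3. Derive velocities from the position change and commit the positions.
    v = [[(pi[k] - xi[k]) / dt for k in range(3)] for pi, xi in zip(p, x)]
    return p, v

# Usage: a pendulum with particle 0 pinned and particle 1 hanging at distance 1.
x0 = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
v0 = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
x1, v1 = pbd_step(x0, v0, inv_mass=[0.0, 1.0], constraints=[(0, 1, 1.0)])
```

Because constraints are enforced on positions rather than through forces, the step stays stable at large time steps, which is why PBD is attractive for real-time simulation.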

In the summer of 2019, I visited the ROCS lab at Balgrist Campus in Zurich. During my stay, we set up the CAMC system there and invented a radiation-free 3D-camera-to-medical-device calibration method that exploits the rigid motion of the mobile C-arm geometry. Assuming the C-arm is not translated, the arm's space of motion can be described by a 2-sphere. An object rigidly attached to the arm (with a fixed orientation and translation relative to it) then moves on a surface that can be described geometrically as a torus. Based on this observation, we developed an algorithm that registers the camera to the medical device solely from the camera-tracked poses. We evaluated it under different noise levels and pose samplings and showed that it is robust. We presented this work at SPIE Medical Imaging (slides). However, we neglected small distortions (mechanical bending of the arm due to its heavy enclosed housing). It would be interesting to extend this idea to general hand-eye calibration problems and investigate whether motion constrained to a predefined geometry yields better calibration results than general hand-eye calibration algorithms.
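The torus observation can be checked numerically under an idealized, hypothetical model: a point rotates about one axis, and that axis is itself carried around a second, perpendicular axis offset by a distance `R`. The axis placement, `R`, and `r` are assumptions chosen for illustration, not the real C-arm geometry, and the code checks only the geometry, not the registration algorithm.

```python
import math

def rotate_about_axis(p, origin, axis, angle):
    """Rodrigues rotation of point p about the line through origin with unit direction axis."""
    v = [p[k] - origin[k] for k in range(3)]
    kx, ky, kz = axis
    c, s = math.cos(angle), math.sin(angle)
    cross = [ky * v[2] - kz * v[1], kz * v[0] - kx * v[2], kx * v[1] - ky * v[0]]
    dot = kx * v[0] + ky * v[1] + kz * v[2]
    return [origin[k] + v[k] * c + cross[k] * s + axis[k] * dot * (1.0 - c) for k in range(3)]

def carm_point(alpha, beta, R=0.8, r=0.3):
    """Hypothetical model: rotate by alpha about an axis parallel to y through (R, 0, 0),
    then by beta about the z-axis; the two axes are perpendicular and offset by R."""
    p = [R + r, 0.0, 0.0]
    p = rotate_about_axis(p, (R, 0.0, 0.0), (0.0, 1.0, 0.0), alpha)
    return rotate_about_axis(p, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), beta)

def torus_residual(p, R=0.8, r=0.3):
    """Implicit torus around the z-axis: (sqrt(x^2 + y^2) - R)^2 + z^2 - r^2."""
    x, y, z = p
    return (math.hypot(x, y) - R) ** 2 + z ** 2 - r ** 2
```

Every pose sampled this way satisfies the implicit torus equation (residual zero up to rounding), which is the kind of constraint a pose-only calibration can exploit.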

Back at JHU, I spent most of my time as a TA for augmented reality, computer vision, and computer graphics courses. Here are some fun HoloLens projects our students built: a car-driving game you can play anywhere you want (right), and Rocket League in HoloLens (middle), controlled with gaze and air taps.

Later in my PhD, attracted by the beauty of mathematics and computational algorithms, I became more involved in geometry processing research. Prof. Misha Kazhdan introduced me to the field in his Discrete Differential Geometry and Computational Geometry classes. I fell in love with it and started doing research projects with Prof. Kazhdan. I first explored adaptive Poisson Surface Reconstruction but did not obtain better results. Then, I worked on shape correspondence, using Prof. Kazhdan's conformalized mean curvature flow (above left), authalic evolution (middle top), and spherical optical flow (right) to establish dense point-to-point correspondences between genus-zero surfaces (middle bottom). This work was presented at the JHU LCSR Seminar, SGP 2019, and Capital Graphics 2019.
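Once both surfaces have been mapped to the unit sphere and aligned (the job the flows above perform), a dense correspondence can be read off on the sphere. The sketch below assumes that setup and uses a brute-force nearest-neighbor search by great-circle distance; the function names are hypothetical, and a practical pipeline would more likely interpolate inside spherical triangles and use a spatial index.

```python
import math

def angular_distance(a, b):
    """Great-circle distance between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))  # clamp against rounding

def spherical_correspondence(src, dst):
    """For each unit vector in src, the index of the closest unit vector in dst."""
    return [min(range(len(dst)), key=lambda j: angular_distance(p, dst[j]))
            for p in src]

src = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
dst = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
matches = spherical_correspondence(src, dst)  # [1, 0]
```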

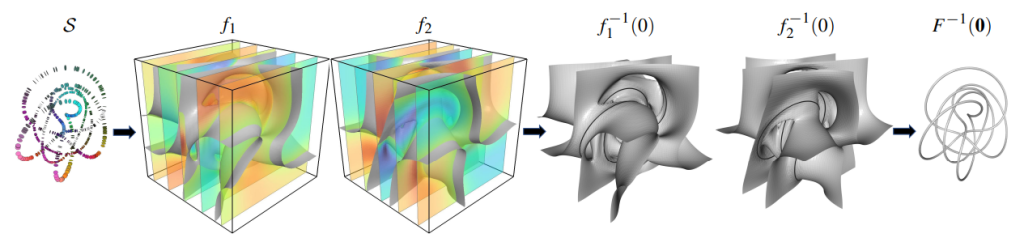

My last project with Prof. Kazhdan was Poisson Manifold Reconstruction. In this work, we generalize Poisson Surface Reconstruction (PSR) to manifolds of higher codimension using exterior calculus. Following PSR, we reconstruct a higher-codimension manifold by matching the sampled manifold's normal (S) to the wedge product of the gradients of scalar indicator functions (f1, f2). The intersection of the indicator functions' level sets defines the reconstructed manifold (F). This work won the Best Paper award at SGP 2023.
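For a codimension-2 example (a curve in 3D), the wedge $\nabla f_1 \wedge \nabla f_2$ is a 2-vector spanning the curve's normal plane, and in $\mathbb{R}^3$ its Hodge dual is the cross product, which points along the curve. The sketch below checks this fact with hypothetical analytic stand-ins for the two scalar functions (a cylinder and a plane whose intersection is the unit circle); it illustrates only the geometry, not the reconstruction solver.

```python
import math

def wedge3(a, b):
    """Bivector a ^ b in R^3, as coefficients on (e2^e3, e3^e1, e1^e2).

    Under the Hodge star these coefficients equal the cross product a x b.
    """
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

# Hypothetical stand-ins: f1 = x^2 + y^2 - 1 (a cylinder) and f2 = z (a plane).
# Their common zero set is the unit circle in the xy-plane.
def grad_f1(p):
    return (2.0 * p[0], 2.0 * p[1], 0.0)

def grad_f2(p):
    return (0.0, 0.0, 1.0)

def dual_direction_on_circle(theta):
    """Hodge dual of grad f1 ^ grad f2 at the circle point with angle theta."""
    p = (math.cos(theta), math.sin(theta), 0.0)
    return wedge3(grad_f1(p), grad_f2(p))
```

At every point of the circle the result is a nonzero multiple of the tangent $(-\sin\theta, \cos\theta, 0)$, so matching this wedge to the sample normals constrains the orientation of the reconstructed curve.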

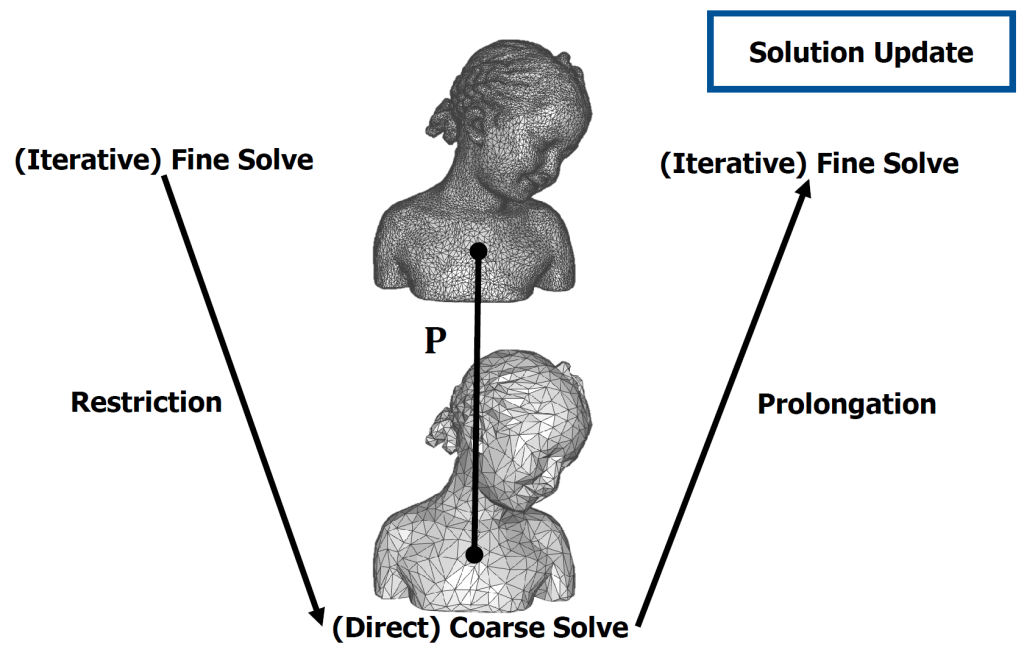

To finish my PhD, I studied Hierarchical Gradient Domain Vector Field Processing using exterior calculus and the multigrid method (a successive subspace correction method). While the multigrid method significantly improves the performance of scalar field processing on surfaces, we found that it improves vector field processing only for small time steps. When the time step is large, the hierarchical solver does not distribute the “work” of resolving the high and low frequencies of the solution as well as it does in the scalar case. We mitigated the slow convergence by integrating a Krylov subspace method, but further research is required to bring large-time-step vector field processing to real time. I have presented this work at the JHU Shape Analysis Seminar, Capital Graphics 2023, and, of course, in my dissertation defense.
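To illustrate the hierarchical idea in the scalar setting, where the text says multigrid works well, here is a textbook geometric multigrid V-cycle for a 1D system $(I + tL)u = b$, a stand-in for one implicit diffusion step with time step $t$. It is a toy 1D scalar example, not the dissertation's exterior-calculus vector field solver; the grid sizes, weighted-Jacobi smoother, and parameters are assumptions.

```python
def apply_op(u, h, t):
    """Compute (I + t*L) u, with L the 1D negative Laplacian and zero Dirichlet ends."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append(u[i] + t * (2.0 * u[i] - left - right) / (h * h))
    return out

def residual(u, b, h, t):
    return [bi - ai for bi, ai in zip(b, apply_op(u, h, t))]

def jacobi(u, b, h, t, sweeps=3, omega=2.0 / 3.0):
    """Weighted Jacobi smoothing: quickly damps the high-frequency error."""
    diag = 1.0 + 2.0 * t / (h * h)
    for _ in range(sweeps):
        r = residual(u, b, h, t)
        u = [ui + omega * ri / diag for ui, ri in zip(u, r)]
    return u

def restrict(r):
    """Full weighting from 2m+1 fine points to m coarse points."""
    m = (len(r) - 1) // 2
    return [0.25 * r[2 * j] + 0.5 * r[2 * j + 1] + 0.25 * r[2 * j + 2] for j in range(m)]

def prolong(e, n_fine):
    """Linear interpolation from m coarse points to n_fine = 2m+1 fine points."""
    f = [0.0] * n_fine
    for j, ej in enumerate(e):
        f[2 * j + 1] += ej
        f[2 * j] += 0.5 * ej
        f[2 * j + 2] += 0.5 * ej
    return f

def v_cycle(u, b, h, t):
    if len(u) <= 3:
        return jacobi(u, b, h, t, sweeps=100)   # coarsest level: smooth to convergence
    u = jacobi(u, b, h, t)                      # pre-smoothing
    r_coarse = restrict(residual(u, b, h, t))   # remaining smooth error goes to the coarse grid
    e_coarse = v_cycle([0.0] * len(r_coarse), r_coarse, 2.0 * h, t)
    u = [ui + ei for ui, ei in zip(u, prolong(e_coarse, len(u)))]
    return jacobi(u, b, h, t)                   # post-smoothing

def solve(b, h, t, cycles=20):
    u = [0.0] * len(b)
    for _ in range(cycles):
        u = v_cycle(u, b, h, t)
    return u

# Usage: 63 interior points on [0, 1], i.e. 2^k - 1 points for clean coarsening.
u = solve([1.0] * 63, h=1.0 / 64.0, t=1.0)
```

A common way to combine such a hierarchy with a Krylov method is to use the V-cycle as a preconditioner for conjugate gradients; whether the dissertation does exactly this is not stated here.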

What my lab is doing now

At Bucknell, I started three research projects. The first began at MIT Reality Hack 2024, where I brought a student to participate in the contest. I met a team with a common interest in accessibility, and we developed a prototype of AR Limbs. At first, I considered using muscle sensors to build a game controller, but I later realized it would be more impactful as an accessible controller. This prototype (RECOVR) won several awards at the hackathon, including a startup pitch competition (slides). Today, Meta has shown the possibility of sEMG-based XR wristband controllers, but do they work for amputees? In this project, a team of Bucknell students (Clea Ramos, Minh Pham, Neath Ly, TJ Freeman, and Wera Kyaw Kyaw) is designing a prototype and investigating how sEMG-based controllers can help amputees regain their touch in XR or even in the real world.
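A common baseline for turning a muscle signal into controller input is to compute a moving RMS envelope and threshold it with hysteresis, so one contraction produces one "press". The sketch below shows that baseline; it is not RECOVR's actual pipeline, the window and thresholds are hypothetical, and real sEMG would need filtering and per-user calibration.

```python
import math

def rms_envelope(samples, window):
    """Moving RMS over (up to) the last `window` samples."""
    out, acc = [], 0.0
    for i, s in enumerate(samples):
        acc += s * s
        if i >= window:
            acc -= samples[i - window] ** 2
        out.append(math.sqrt(max(acc, 0.0) / min(i + 1, window)))
    return out

def detect_presses(envelope, on=0.5, off=0.3):
    """Hysteresis thresholding: record the index where activation rises above `on`,
    and re-arm only after it falls below `off`."""
    presses, active = [], False
    for i, e in enumerate(envelope):
        if not active and e > on:
            presses.append(i)
            active = True
        elif active and e < off:
            active = False
    return presses

# Synthetic signal: rest, a contraction burst, rest, another burst, rest.
burst = [1.0 if k % 2 == 0 else -1.0 for k in range(10)]
signal = [0.0] * 10 + burst + [0.0] * 10 + burst + [0.0] * 10
presses = detect_presses(rms_envelope(signal, window=4))
```

The gap between the `on` and `off` thresholds keeps a noisy envelope from triggering repeated presses during a single contraction.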

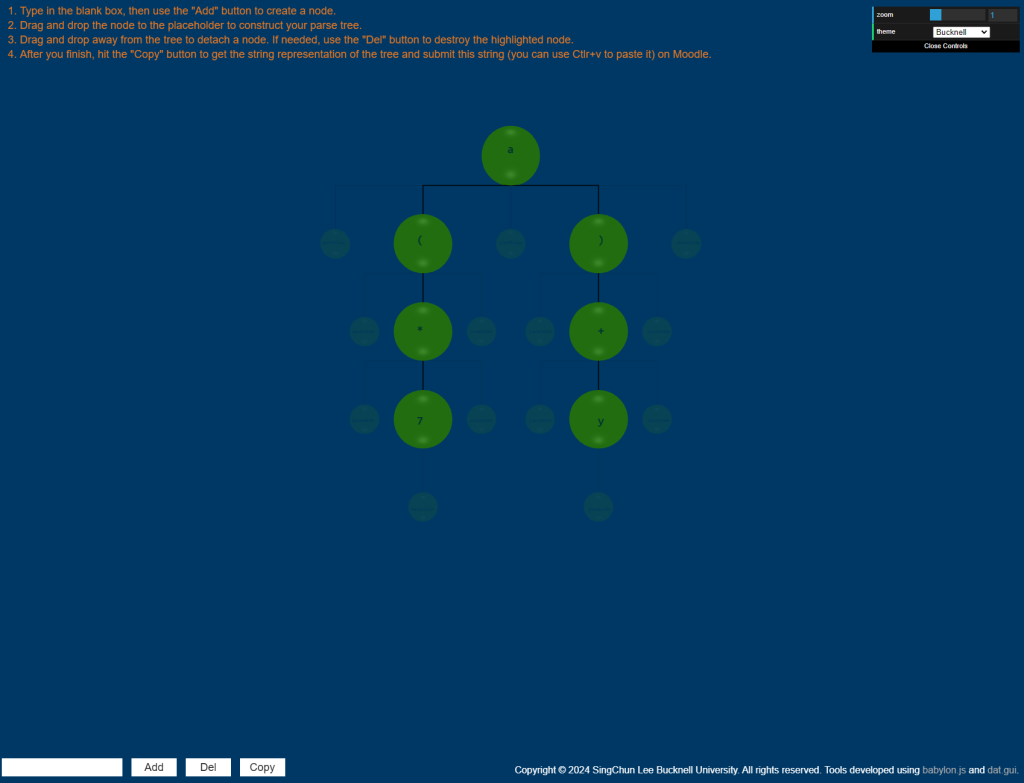

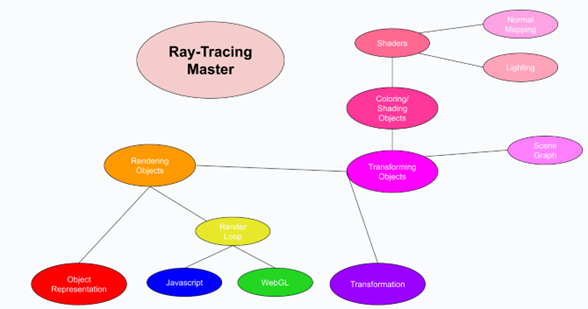

Another project I started investigates how AR may affect CS education and/or the teaching of computational thinking. While industry demands more CS professionals, the dropout rate among CS students remains high. One possible reason, suggested by constructivist views of CS learning, is that freshmen struggle to build their own models of CS concepts because they lack a related prior model. Ben Khant, Cyrus Kuhn, and Colin Soule are currently designing AR tools to investigate whether AR can help students bridge this model gap.

As a gamer, I have struggled to find time to play games since becoming a professor. To address this problem, I decided to turn my courses into games. However, this must be done carefully, as we do not want students to learn superficially. In this project, we research and develop RPG-like deep gamification. Currently, TJ Freeman is doing an independent study with me to design the skill-tree framework. If you are interested in designing or making games that help students learn not only with fun but also more deeply, come to my office and let's talk.
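A skill tree is essentially a prerequisite graph: a skill unlocks once everything it depends on has been mastered, which ties progress to demonstrated understanding rather than accumulated points. The sketch below is a hypothetical structure with made-up skill names, not the framework being designed in the independent study.

```python
from dataclasses import dataclass, field

@dataclass
class Skill:
    name: str
    prerequisites: list = field(default_factory=list)  # names of required skills

def unlockable(skills, mastered):
    """Names of skills not yet mastered whose prerequisites are all mastered."""
    return sorted(
        s.name for s in skills
        if s.name not in mastered and all(p in mastered for p in s.prerequisites)
    )

# Hypothetical skill tree for an intro programming course.
tree = [
    Skill("variables"),
    Skill("loops", ["variables"]),
    Skill("functions", ["variables"]),
    Skill("recursion", ["functions", "loops"]),
]
```

For example, a student who has mastered only `variables` can next choose between `functions` and `loops`, while `recursion` stays locked until both are mastered.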

Besides my own research agenda, I also help students pursue research they like. Students interested in AR and/or geometry processing come to my office to discuss what they want to study. This usually becomes a PUR and/or an independent study on a topic the student wants to explore. Titus Weng, who started as a PUR last summer, is working on a direct sign-language-to-sign-language translator for immersive environments. We submitted his preliminary results to the SVR 2024 Undergraduate Workshop, where he presented them (slides) and won the Second Best Paper award.

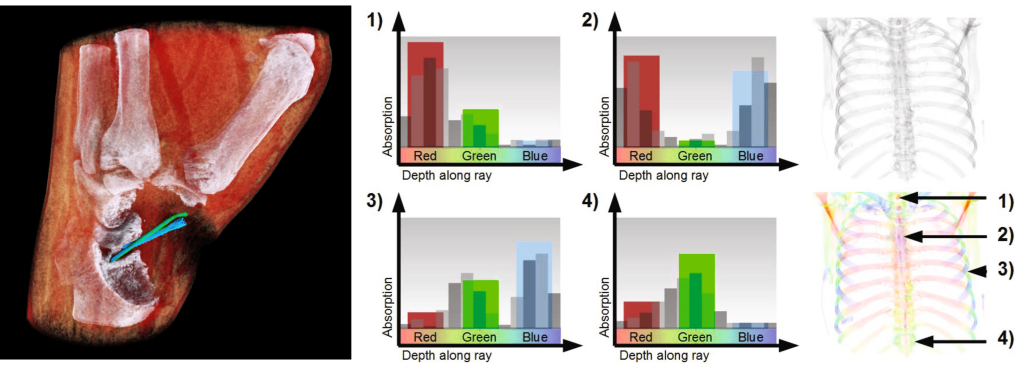

After a summer internship at Medivis, Hung Ngo grew interested in medical visualization. Hung is currently working on X-ray image colorization. In 2022, CERN invented colored X-ray imaging using Medipix3 technology. The new chips can detect different X-ray wavelengths (energies), which makes it possible to separate materials by their X-ray attenuation and thus to color them. Back in 2008, Aichert et al. produced colored X-rays by registering pre-operative CT data to the X-rays and using a color transfer function to colorize them. (Image courtesy of CERN and Aichert et al.) With today's machine learning technology, can we learn the color mapping from prior data and colorize any other X-ray?
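A color transfer function maps a scalar value, for example a normalized attenuation, to a color. Below is a minimal piecewise-linear one; the control points are made up, and the sketch shows only this building block, not Aichert et al.'s registration-based pipeline or the learning approach Hung is exploring.

```python
def lerp(a, b, t):
    return a + (b - a) * t

def transfer_function(value, control_points):
    """Map a scalar to RGB by piecewise-linear interpolation between
    (position, (r, g, b)) control points sorted by position."""
    if value <= control_points[0][0]:
        return control_points[0][1]
    for (x0, c0), (x1, c1) in zip(control_points, control_points[1:]):
        if value <= x1:
            t = (value - x0) / (x1 - x0)
            return tuple(lerp(a, b, t) for a, b in zip(c0, c1))
    return control_points[-1][1]  # clamp values above the last control point

# Hypothetical control points: dark for low attenuation, reddish mid-range, white for dense tissue.
tf = [(0.0, (0, 0, 0)), (0.5, (200, 60, 40)), (1.0, (255, 255, 255))]
mid_low = transfer_function(0.25, tf)   # (100.0, 30.0, 20.0)
```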

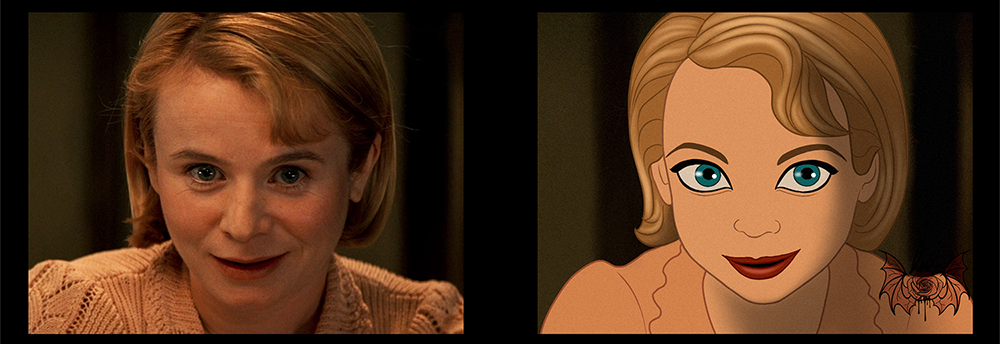

Jameson McParland is working on another fun project: image cartoonization. Many works in this area cartoonize an image by transferring its style, leaving the underlying geometry unchanged. Jameson is investigating and developing a new method that cartoonizes an image together with its geometry, similar to how an artist would cartoonize a photo, as shown on the left. (Image courtesy of LuxBlack)

The uniformization theorem states that every simply connected Riemann surface is conformally equivalent to the sphere, the plane, or the hyperbolic disk. In the continuous setting, a closed genus-zero Riemann surface can therefore be conformally mapped to a sphere. However, suppose we have different discretizations of the same Riemann surface. Because information is lost during discretization, the discrete conformal maps of the different discretizations to the sphere do not agree. Hung Pham is investigating how to conformally map different discretizations to the same spherical parameterization using the surface's intrinsic geometric properties.
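To make "discrete conformal" concrete, one widely used definition, discrete conformal equivalence via per-vertex scale factors, is shown below; whether this project uses this particular definition is an assumption. Two triangle meshes with the same connectivity and edge lengths $\ell_{ij}$ and $\tilde{\ell}_{ij}$ are discretely conformally equivalent if there exist per-vertex logarithmic scale factors $u_i$ such that, for every edge $ij$,

$$
\tilde{\ell}_{ij} = e^{(u_i + u_j)/2}\, \ell_{ij}.
$$

Because this definition only relates meshes with identical connectivity, it does not by itself relate two different discretizations of the same surface.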

What I am interested in, but no one is working on…

Line drawing for medical visualization.

Continuing the work of my PhD dissertation: efficient hierarchical vector field processing.

Mesh compression.

And more to be added…